In brief

- Integrating mainframes with hybrid cloud architecture offers the best of both worlds: Mainframe reliability and security, plus cloud scalability and innovation potential

- Although COBOL is considered a legacy language, it supports 80% of in-person credit card transactions, handles 95% of all ATM transactions, and powers systems that generate more than US$3 trillion of commerce each day. Thanks to its superior stability and processing power, it continues to play an integral role in helping businesses maintain apps and programs in existing architectures

- As soon as you increase product depth and complexity or expand corporate retail and wealth management, Java’s economic gain shrinks. The sheer weight of activity that mainframes lift every second, minute, hour, day, week, month and year beats wholesale cloud cost savings hands down. Plus, any expansion of Oracle’s Java licensing is bound to aggravate the TCO issue

What’s so special about cloud-based banking?

The buzz is that, somehow, cloud is a cure for every banking malady. The only remedial game in town, as it were. Granted, migration offers speed, flexibility and convenience. But, equally, it brings threat, danger and complexity.

If you're conducting business on an assurance platform, shouldn’t your primary focus be safety, certainty and simplicity? Customers will be extremely unhappy if crucial data goes walkabout or they can't get at their cash.

Two sides of the same futuristic coin

Clearly, next-gen banking must provide the best of both worlds.

It makes absolute sense to use the best processor — mainframe hybrid cloud — for the most important job. Systems of record work brilliantly on mainframes, and COBOL is the accepted finance lexicon — Java has problems at scale and can't process as efficiently or accurately as COBOL.
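One reason often given for COBOL's accuracy with money is its native fixed-point decimal arithmetic. As a rough illustration only, the Python sketch below contrasts binary floating point with decimal arithmetic. The ledger amounts and function names are invented for the example, and other languages can reach the same exactness with a decimal library type (Java's `BigDecimal`, for instance).

```python
from decimal import Decimal, ROUND_HALF_UP


def total_float(amounts):
    """Sum amounts using binary floating point (inexact for most decimal fractions)."""
    total = 0.0
    for amount in amounts:
        total += float(amount)
    return total


def total_decimal(amounts):
    """Sum amounts using decimal arithmetic, rounded to whole cents."""
    total = sum((Decimal(amount) for amount in amounts), Decimal("0"))
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Hypothetical ledger entries, kept as strings so no precision is lost on input.
entries = ["0.10", "0.20", "0.30"]

print(total_float(entries) == 0.6)                # False: rounding error has crept in
print(total_decimal(entries) == Decimal("0.60"))  # True: exact to the cent
```

Across billions of postings a day, small rounding differences of this kind are what decimal arithmetic is meant to rule out.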

Many consider COBOL to be a legacy language. However, “heritage” is a more appropriate descriptor, because the language’s pivotal, orderly and object-oriented configuration is the mainstay for over 40% of digital banking systems today.

Reports of COBOL’s death are greatly exaggerated

The vintage programming language still underwrites 80% of in-person financial services transactions, manages 95% of all ATM activities and processes US$3 trillion of daily commerce. There are over 220 billion lines of code, and 1.5 billion more are written annually. In addition, COBOL’s matchless stability and processing power help banks maintain apps and programs in existing architectures.

Some commentators suggest that because you have aging engineers, you should use AI to convert all this COBOL code to Java. Well, the simple fact is, you don't know if it will work.

Also, why would anyone advise a bank to convert all their code (most likely below standard) to Java? With Hogan, you can run your existing bank and partition or open up new partitions using Umbrella on the new code. Over time, you can move loans and mortgage types, doing it all in the framework and keeping a common front end.

This is far more effective than deciding, like one institution, to rewrite COBOL to Java and spend $100 million, only to find that it didn't work because it couldn't scale.

Learn on the job

IBM is addressing the age and skill shortage issue by educating a new generation of COBOL engineers. Creating internal academies is a great idea. And a hybrid cloud environment helps you enthuse your future workforce with the thought that they'll work across both mainframes and the latest cloud-native technologies.

However, although this long-term strategy is a powerful solution, trying to change 11 million lines of code to Java or waiting for a new COBOL generation to emerge is unworkable in the immediate term. If you have a time problem, just tackle that head-on — create an academy and bring in the new generation. It's safer, practical and far more cost-effective.

Java’s no match for the heavyweights

As far as I'm aware, no Tier 1/2 banks across retail and corporate have multiple product lines and cards at scale using 20-year-old Java. It's OK for a neo retail bank that selects 10X, Mambu, Thought Machine and the like, with narrow product lines, small markets and limited customer bases. They can benefit from the extra speed and efficiency that running in the cloud provides.

But as soon as you decide to increase product depth and complexity or expand into corporate retail and wealth management, the economic gain shrinks. The sheer weight of activity that mainframes lift every second, minute, hour, day, week, month and year beats wholesale cloud cost savings hands down. Plus, any expansion of Oracle’s Java licensing is bound to aggravate the TCO issue.

And, most importantly, once you’ve exited on-prem, backtracking is no walk in the park.

So, what do you actually want to do?

Let’s back up a bit. Question: Do these charts reflect the aims of your management team?

How about customer-centricity?

And digital first?

Not all digital progress is revolutionary.

Hogan clients have always had access to the features shown in the modern platform-based architecture model (above right). How come? Because of the way Hogan is architected with Umbrella. And how clients have used API orchestration tools such as z/OS Connect and MuleSoft. They've always been able to create services.

Yes, hyper-fast delivery is a Banking 4.0 requirement, which means adopting DevOps, modern tools and a specific mindset. But the barrier to front-end speed is internal processes, not Hogan. Some banks can release new code every other week; others have to wait an eternity for the cycle to run its course.

Hogan’s always on

Hogan clients are always on as long as the architecture is set up and used correctly, as planned. They can bring partitions up and down for loans and maintenance, then replicate across in real time.

Another vital requirement of Banking 4.0 is the real-time use of data, and the screening of live operational change data via Hogan is on its way. Soon, once someone opens an account, the information will flow into an analytics database and geopositioned, real-time dashboards will track what monies are moving where and which accounts are opening/closing.
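To make the idea concrete, here is a minimal Python sketch of how a stream of change events could feed a live dashboard. It is an illustration under stated assumptions, not Hogan's implementation: the `ChangeEvent` fields, the event kinds and the `Dashboard` class are all hypothetical.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class ChangeEvent:
    """Hypothetical change-data record; the field names are assumptions."""
    kind: str                      # "open", "close" or "transfer"
    account_id: str
    region: str
    amount: Decimal = Decimal("0")


class Dashboard:
    """Keeps running totals per region as events arrive."""

    def __init__(self):
        self.account_counts = defaultdict(Counter)  # region -> counts by event kind
        self.money_moved = defaultdict(Decimal)     # region -> total transferred

    def apply(self, event):
        if event.kind in ("open", "close"):
            self.account_counts[event.region][event.kind] += 1
        elif event.kind == "transfer":
            self.money_moved[event.region] += event.amount
        else:
            raise ValueError(f"unknown event kind: {event.kind}")

    def net_new_accounts(self, region):
        counts = self.account_counts[region]
        return counts["open"] - counts["close"]


# Invented sample events standing in for a live feed.
dashboard = Dashboard()
for event in [
    ChangeEvent("open", "A1", "north"),
    ChangeEvent("open", "A2", "north"),
    ChangeEvent("transfer", "A1", "north", Decimal("250.00")),
    ChangeEvent("close", "A2", "north"),
]:
    dashboard.apply(event)

print(dashboard.net_new_accounts("north"))  # 1
print(dashboard.money_moved["north"])       # 250.00
```

In a real deployment the events would arrive from a replication or messaging feed rather than a list, and the totals would be pushed to the analytics database behind the dashboards.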

Harnessing exceptional potential

Crucially, banks can leverage new cloud technologies while upgrading existing mainframe applications to work more holistically within a hybrid environment. This also ensures software leverages unique hardware potential, an operation many institutions struggle with.

Shifting to a mainframe hybrid cloud infrastructure means improved support for disparate teams, lower costs, greater control and scalability, sustained agility and innovation, business continuity, tighter security and enhanced risk management.

Composable, modular and layered

Composable, modular, layered and AI-/API-driven, Hogan’s benchmark mainframe hybrid cloud solution takes the best IBM Z solutions and provides blueprints for banks with restrictive cores to be “migrated to a modern platform”.

Source: Celent, “Continuous Digital Transformation in the Cloud”

Hogan reduces latency and increases security by hosting cloud-native apps safely on the zLinux partition inside the same box. That's the mainframe hybrid cloud solution. It gives you essential, real-time, operational data control, a central Banking 4.0 requirement.

Hogan is on a continual development program involving refined user journeys, graphical user interfaces and many other enhancements, collapsing 15 loan inquiry screens into one guided screen, for example. This program dovetails with what IBM and DXC Luxoft are doing with AI on mainframe.

Mainframe AI

Mainframes are the true center of critical business operations, with proven track records for reliability, scalability and processing power. Despite being thought of by some as “old skool”, mainframes can claim unrivalled qualities that make them a perfect fit for modern AI-driven applications. Here are just three reasons why:

- Mainframes have enormous reserves of precious structured/unstructured data that can be used to train AI models. This practice enhances algorithmic potency and authenticity, facilitating better-briefed decision-making and predictive outcomes

- Mainframes are hard-wired to process prodigious quantities of transactions and computational complexities. AI workloads involve fearsome calculations, e.g., training deep learning models or running complex algorithms, and that kind of compute power is indispensable. Banks can expedite training and inference by using mainframe processing strengths to cut time-to-truth

- Mainframe characteristics include heavy-duty security and compliance, essential for handling sensitive data in AI applications. By leveraging mainframes for AI, banks can use the built-in security capabilities to safeguard data privacy, ensure regulatory compliance and fight off cyber threats. IBM’s z16 system uses quantum-safe cryptography to protect client systems and sensitive data against the potentially harmful use of advanced technologies

Keep cost to a minimum

Diverting AI to the data location is the most logical plan of action. Repurposing mainframes and using existing hardware/software for AI programs enables banks to sidestep heavy infrastructure investment.

A recent IBM and DXC Luxoft webinar about AI on the mainframe showcased a proof of concept that can influence, score and analyze probability. It was carried out on the z15 and added a couple of milliseconds of latency across millions of transactions. Using a z16 with the first Telum processor will be even faster. IBM recently announced the Telum II, which is likely to be four times faster. Future editions of IBM mainframes will deliver even greater processing power with more quantum-safe applications.
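The general pattern of scoring each transaction in line, and measuring what the scoring adds to latency, can be sketched in a few lines of Python. This is not the proof of concept from the webinar: the feature names, weights, bias and threshold below are invented, and a simple logistic model stands in for whatever model a bank would actually train.

```python
import math
import time

# Hypothetical model parameters; a real model would be trained on historical data.
WEIGHTS = {"amount_zscore": 1.2, "new_device": 0.8, "foreign_merchant": 0.5}
BIAS = -3.0


def fraud_probability(features):
    """Logistic score in (0, 1); missing features default to 0."""
    z = BIAS + sum(weight * features.get(name, 0.0) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def process(txn, threshold=0.5):
    """Score a transaction in line and record the time the scoring added."""
    start = time.perf_counter()
    probability = fraud_probability(txn["features"])
    scoring_ms = (time.perf_counter() - start) * 1000.0
    return {
        "id": txn["id"],
        "probability": probability,
        "flagged": probability >= threshold,
        "scoring_ms": scoring_ms,
    }


# Invented sample transactions.
routine = {"id": "T1", "features": {}}
unusual = {"id": "T2", "features": {"amount_zscore": 3.0, "new_device": 1.0, "foreign_merchant": 1.0}}

for txn in (routine, unusual):
    result = process(txn)
    print(result["id"], result["flagged"], f"{result['scoring_ms']:.4f} ms")
```

Keeping the scoring call next to the data, inside the transaction path, is what holds the added latency to a small and measurable amount.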

For Tier 1 and 2 banks, their future banking platform is already here.

Mainframe compute power

IBM’s Telum processor is geared toward real-time fraud detection/prevention at scale and evolving scenarios employing deep learning inference. It incorporates on-chip acceleration for AI inference during transactions, a first for IBM. The Wall Street Journal article "Mainframes Find New Life in AI Era" revealed that the next iteration of the IBM Z mainframe series would include mainstream AI faculties and large language models (LLMs).

The idea was that enabling AI applications would rejuvenate mainframe working. This is especially attractive to governments as well as enterprises focused on data privacy and nervous about data storage on public clouds or depending on openly accessible data. A self-contained AI system could solve the issue.

Once, it was fashionable to knock mainframes

BIAN (Banking Industry Architecture Network) is creating new architectural and API frameworks around business domains. Unfortunately, their narrative, and that of analysts, has been heavily skewed because of the fashion of cloud. The emergence of cloud working created its own lopsided reality: “Cloud good, mainframe bad, regardless”.

BIAN set the tone: its first three points about mainframes were that they are legacy, monolithic, and must be decomposed to hollow out the core. Others have adopted the narrative, so now enterprises are saying, “When are you going to decompose Hogan?”

What? Decompose the best-integrated core banking platform on the planet? You want super-efficient cards, loans, deposits and loyal customers? Well, on-prem working hasn’t stopped the biggest banks in America from buying other banks and integrating them into Hogan, making Hogan the fastest-growing banking product in the United States.

Two or more heads are better than one

Not everyone believes that “everything on the cloud” is the answer. The Digital Operational Resilience Act (DORA) specifically says don't get sucked into relying on a single cloud provider or cybersecurity intermediary. The recent massive global outage proved that in spades. Not one Hogan client missed a heartbeat. Some batch processing was delayed and Windows stymied branch laptops, but cores never lost a thing. No clients had operational issues from running their core to process money.

It’s extremely rare to have a “severity-one” incident (a critical incident with very high impact). And it's never a core issue. We’ve known only one or two Hogan severity-one issues in the past four or five years.

In-place modernization is your best bet

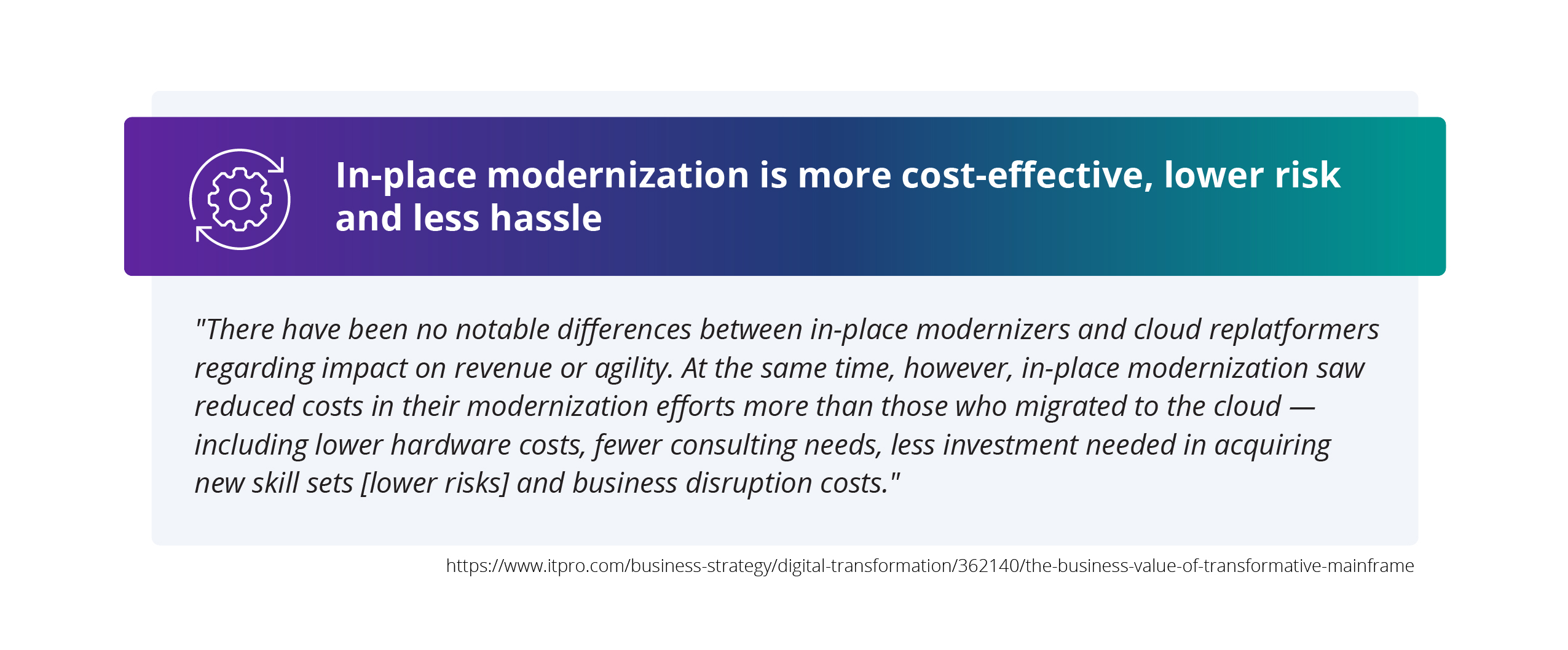

And when it comes to forging ahead and increasing your options, research supports the notion that in-place modernization is a far more effective path for banks.

You could even start a greenfield bank with Hogan, but with all the hype, decision-makers are unlikely to say, naturally, “OK, it's a monolith, a legacy, and so on, but why don’t we start a greenfield bank with Hogan?”

Why not, indeed? Hogan’s pricing beats specific competitors by a factor of 10 because we amortized our product. Low-geared, without a VC breathing down our necks, DXC Luxoft doesn't have to charge 10X, Thought Machine or Mambu prices. Some providers haven’t realized their investment potential at all, and owners must surely be querying when they’ll see a return on their investment. Therefore, ignoring their wholesale cloud adoption, major initiatives could be written off as attempted projects (people tend to change slowly).

Banking 4.0 lists design thinking, technical feasibility and economic viability as particularly desirable characteristics. We’re developing one or two elements, but rest assured, Hogan is a comprehensive, highly feasible and economically viable solution.

Talk to an expert

To learn more about how retaining COBOL and adding AI capabilities in a Hogan-driven mainframe hybrid cloud environment increases security while reducing risk and cost, visit our website. However, if you’d like to dig deeper and discover what Hogan implementation could do for your bank, contact us.